How to crawl and analyse blog URLs in a flat URL structure (WordPress)

I had a new client with a lot of blog posts that I wanted to evaluate from an SEO perspective. Unfortunately, they were using a flat URL structure (posts sit directly under the root domain rather than under something like /blog/), so identifying each post was going to be a laborious task of opening each URL, copying it into an Excel file and then checking each one in Ahrefs, GA and GSC. I knew there must be a quicker way, so I documented one.

I wanted to get the following data for each blog post.

- The number of keywords each page ranks for (Ahrefs)

- How much organic traffic it has received (GA)

- How many impressions it has received (GSC)

Below is the process I followed to get this information (this one is WordPress-specific).

It is possible this could be achieved with something like Semrush, but I prefer to use Screaming Frog (SC) for technical audits and checking sites, so a lot of the elements here can be applied across an entire website.

I used the following tools

- Screaming Frog

- Google Analytics

- Google Search Console

- Ahrefs

If you are using Yoast, you can go to /post-sitemap.xml, get your list of URLs and skip to Step 2. If you are not using Yoast, follow from Step 1.

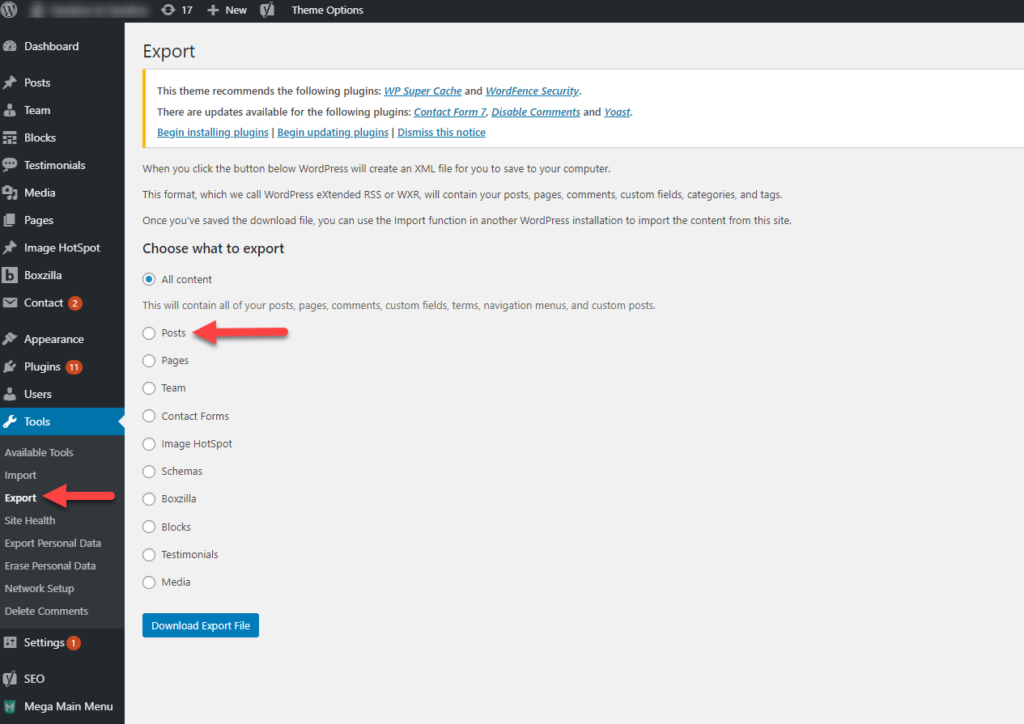
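If you would rather not copy the URLs out of the sitemap by hand, the list can be pulled with a few lines of Python. This is a minimal sketch, not part of the process I actually followed: it assumes the sitemap follows the standard sitemaps.org format, and the function names `urls_from_sitemap` and `fetch_sitemap` are made up for illustration.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_data):
    """Return the page URL of every <url> entry in a sitemap.

    Only <loc> elements that sit directly inside <url> are read, so
    image URLs listed alongside each post are ignored.
    """
    root = ET.fromstring(xml_data)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def fetch_sitemap(sitemap_url):
    """Download the raw sitemap XML as bytes."""
    with urllib.request.urlopen(sitemap_url) as response:
        return response.read()
```

Calling `urls_from_sitemap(fetch_sitemap("https://example.com/post-sitemap.xml"))` (with your own domain in place of the placeholder) should give you one URL per post, ready to paste into Screaming Frog in Step 3.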

Step 1

In WordPress admin go to Tools, then Export, and select the post type that matches where the blog posts live. In this scenario they are all just “Posts”. Click “Download Export File”.

Open the downloaded XML file in your text editor of choice.

This document looks scary because it could be thousands of lines long. However, we only care about one element: anything between `<link>` tags.

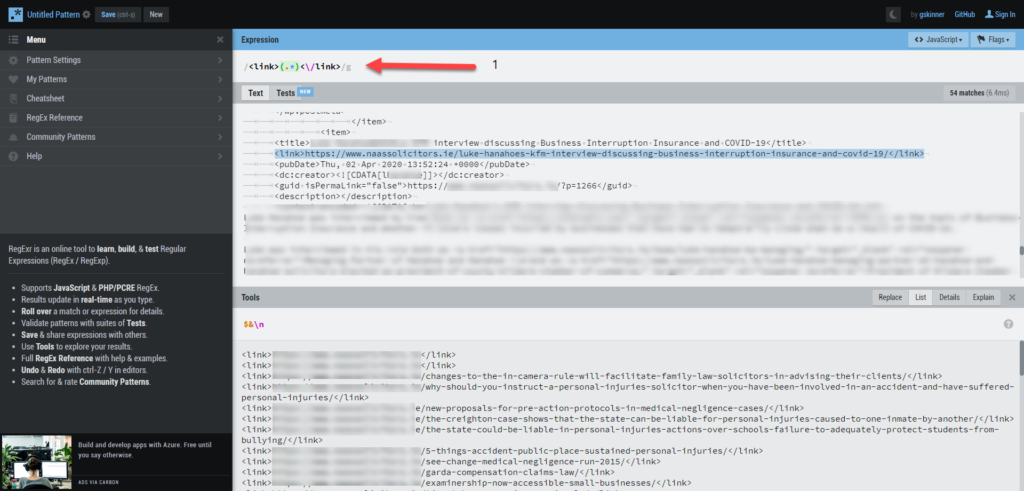

To extract these, copy the contents of your XML file and go to https://regexr.com/.

First clear the text box under “Expression”, leaving behind “/” and “/g”.

Then clear the sample text in the box below.

Enter this expression in the Expression field: `<link>(.*)<\/link>`. You should end up with `/<link>(.*)<\/link>/g`.

Then paste in the contents of your xml file.

Next click on the “List” tab in the Tools section below. This will reveal a nice tidy list of all the URLs you need.

Before proceeding, use find and replace on your list to remove `<link>` and `</link>` from your URL list.
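If you are comfortable running a script, the regex and find-and-replace steps can be swapped for a short Python sketch. To be clear, this is an alternative to the regexr.com route above rather than what I did: it parses the export as XML instead of using a regular expression, and it assumes the export has the usual RSS-style layout where each post is an `<item>` inside `<channel>`. The function name `post_urls_from_export` and the file names in the usage note are hypothetical.

```python
import xml.etree.ElementTree as ET


def post_urls_from_export(xml_data):
    """Return the <link> value of every <item> in a WordPress export.

    Reading only item-level links skips the site-wide <link> that sits
    directly under <channel>, which a plain regex would also pick up.
    """
    root = ET.fromstring(xml_data)
    urls = []
    for item in root.findall("./channel/item"):
        link = item.findtext("link")
        if link and link.strip():
            urls.append(link.strip())
    return urls


def save_urls(urls, path):
    """Write one URL per line so the file can be pasted into a crawler."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(urls) + "\n")
```

For example, reading the export with `open("export.xml", "rb").read()`, passing it to `post_urls_from_export` and then to `save_urls(urls, "urls.txt")` should leave you with a clean list that needs no further find and replace.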

Step 2

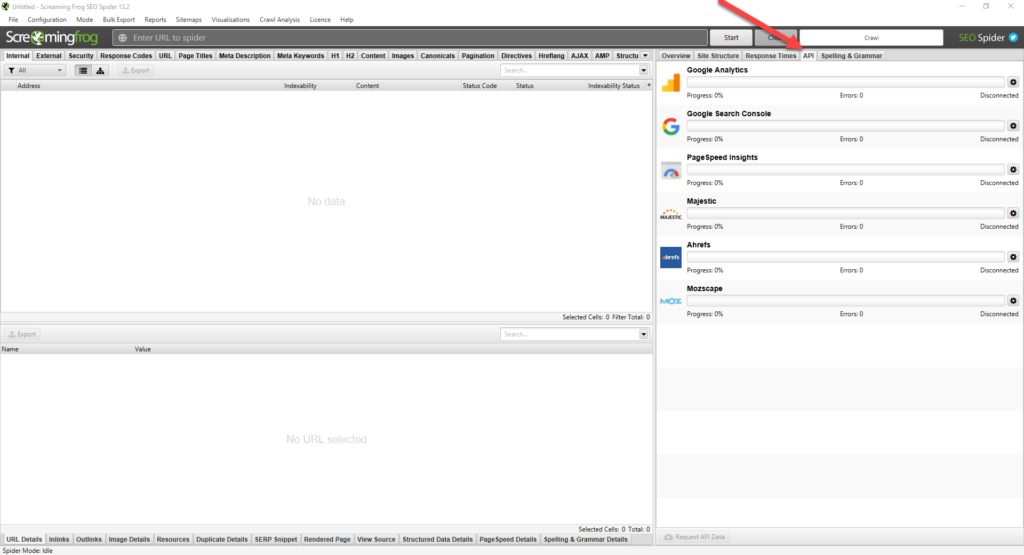

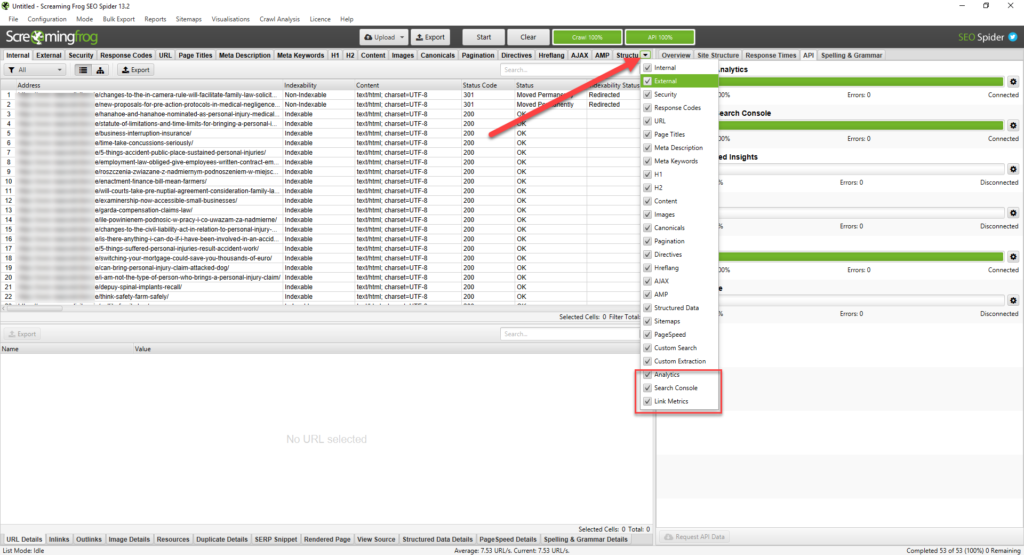

Open Screaming Frog and navigate to the API tab.

Here you need to connect GA, GSC (Google Search Console) and Ahrefs using the little cog icon on the right.

For GA, set the following before finding your GA account:

- Set the date range to a period of 6, 12 or more months (but use the same range for GSC)

- Select only the Sessions box in the Metrics tab

- Then find your GA account, select the organic traffic segment and hit OK

For GSC

- Set the date range to the same as you used in GA

- In the dimension filter specify the correct country

- Hit OK

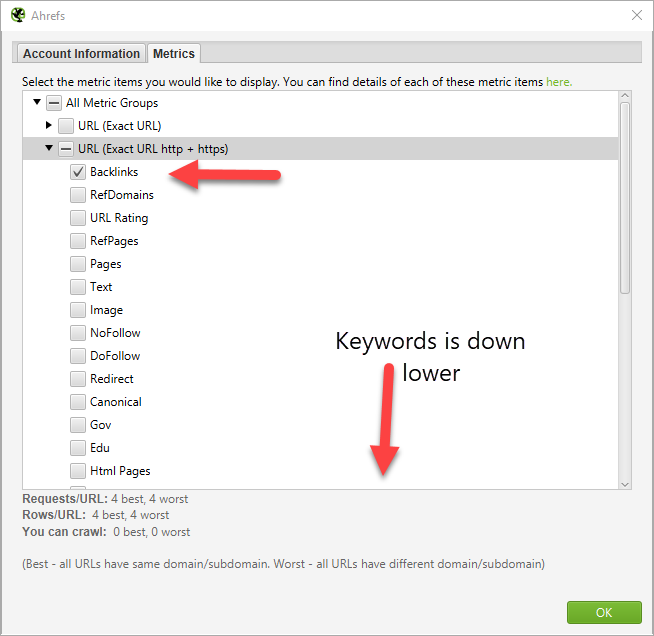

For Ahrefs

- Click the “Generate an API access token” link

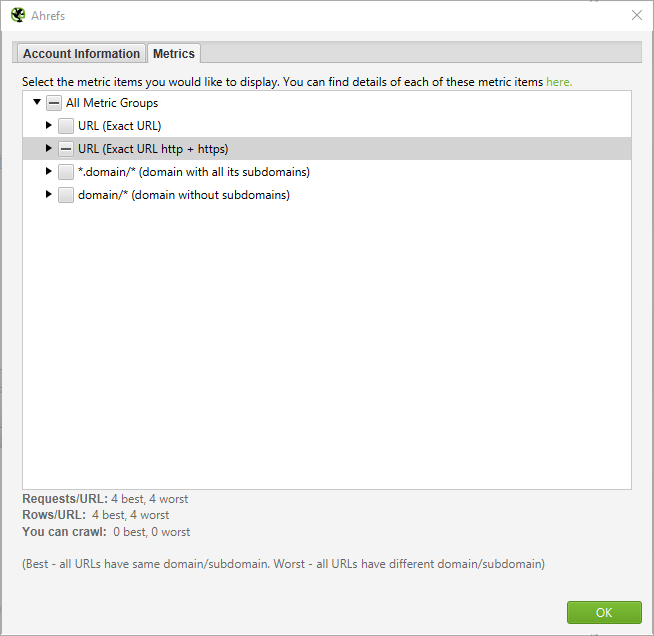

- Under Metrics, open the dropdown for the second option, “URL (Exact URL http & https)”, and select the following options:

- Backlinks

- Keywords

It should look something like this when finished.

Make sure you select only those two fields, otherwise you will pull through far more data than you need.

Paste in your access token, hit Connect and then click OK.

Step 3

Now you’re ready to crawl!

In Screaming Frog go to “Mode” and select “List”.

Paste in your now clean URL list and hit Start.

Once the crawl has finished, the columns will be available with the requested data.

You can now analyse this within Screaming Frog or export it to Excel to manipulate it further.
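If you export the crawl as a CSV, a first pass over the data can also be scripted. The sketch below sorts posts by organic sessions so the weakest ones surface first. The column names (`Address`, `GA Sessions`, `Impressions`, `Ahrefs Keywords - Exact`) are assumptions about how the export is labelled, so check them against your own file and pass different names if they do not match. `rank_posts` and `to_int` are illustrative names.

```python
import csv
import io


def to_int(value):
    """Convert a CSV cell to an integer, treating blanks as zero."""
    try:
        return int(float(value.replace(",", "")))
    except (AttributeError, ValueError, OverflowError):
        return 0


def rank_posts(
    csv_text,
    url_col="Address",                       # assumed column name
    sessions_col="GA Sessions",              # assumed column name
    impressions_col="Impressions",           # assumed column name
    keywords_col="Ahrefs Keywords - Exact",  # assumed column name
):
    """Return one dict per post, sorted by sessions, lowest first."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows.append(
            {
                "url": row.get(url_col, ""),
                "sessions": to_int(row.get(sessions_col)),
                "impressions": to_int(row.get(impressions_col)),
                "keywords": to_int(row.get(keywords_col)),
            }
        )
    rows.sort(key=lambda post: post["sessions"])
    return rows
```

Posts at the top of the returned list with zero sessions but some impressions or keywords are usually the first ones I would look at, since they already have some visibility in search.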